Transferring data from one source to another is a common practice in software development. This operation is preformed in many different scenarios which includes migration of the old system to the new system, backing up the data and collecting data from different publishers. ASP.NET 2.0 includes the SqlBulkCopy class that helps to copy the data from different data sources to SQL SERVER database. In this article I will demonstrate the different aspects of the SqlBulkCopy class.

Introduction:

Transferring data from one source to another is a common practice in software development. This operation is preformed in many different scenarios which includes migration of the old system to the new system, backing up the data and collecting data from different publishers. ASP.NET 2.0 includes the SqlBulkCopy class that helps to copy the data from different data sources to SQL SERVER database. In this article I will demonstrate the different aspects of the SqlBulkCopy class.

Database Design:

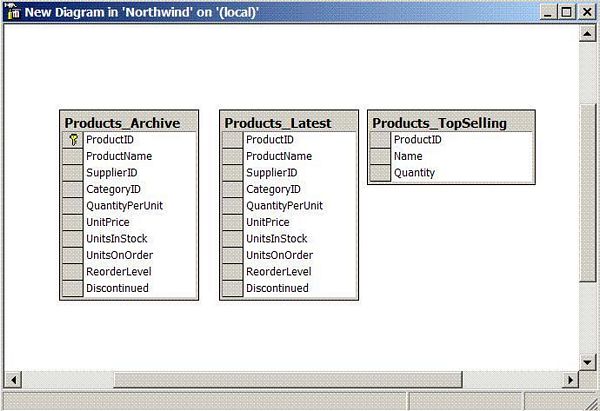

The database design is pretty simple as it is based on the Products table in the Northwind database. I have created three more tables in the Northwind database. Check out the database diagram below to have better idea.

The Products_Archive and Products_Latest have the same schema as the Products table while the Products_TopSelling table is different. I will explain the purpose of Products_TopSelling table later in this article.

The Products_Archive table contains 770,000 rows. You don’t have to worry about how the rows got there; you just need to think how to move all those rows in the Products_Latest table.

Transferring Data from Products_Archive to Products_Latest:

SqlBulkCopy contains an instance method WriteToServer which is used to transfer the data from the source to the destination. WriteToServer method can perform action of DataRow[] array, DataTable and DataReader. Depending on the situation you can choose the container you like but in most cases choosing DataReader is a good idea. This is because DataReader is a forward-only, read-only stream. It does not hold the data and thus is much faster then DataTable and DataRows[]. The code below is used to transfer the data from the source table to the destination table.

private static void PerformBulkCopy()

{

string connectionString = @"Server=localhost;Database=Northwind;Trusted_Connection=true";

// get the source data

using (SqlConnection sourceConnection = new SqlConnection(connectionString))

{

SqlCommand myCommand = new SqlCommand("SELECT * FROM Products_Archive", sourceConnection);

sourceConnection.Open();

SqlDataReader reader = myCommand.ExecuteReader();

// open the destination data

using (SqlConnection destinationConnection = new SqlConnection(connectionString))

{

// open the connection

destinationConnection.Open();

using (SqlBulkCopy bulkCopy = new SqlBulkCopy(destinationConnection.ConnectionString))

{

bulkCopy.BatchSize = 500;

bulkCopy.NotifyAfter = 1000;

bulkCopy.SqlRowsCopied += new SqlRowsCopiedEventHandler(bulkCopy_SqlRowsCopied);

bulkCopy.DestinationTableName = "Products_Latest";

bulkCopy.WriteToServer(reader);

}

}

reader.Close();

}

}

There are couple of points to mention here. First I am using the DataReader to fetch to the rows from the database table. SqlBulkCopy class object “bulkCopy” sets the DestinationTableName property to the destination table which in this case is “Products_Latest”. Products_Latest is the destination table since the data is transferred from the Products_Archive table to the Products_Latest table. The bulkCopy object also exposes the SqlRowsCopied event which is fired after the rows identified by the NotifyAfter property has reached. This means the event will be fired after every 1000 rows since NotifyAfter is set to 1000.

The BatchSize property is very important as most of the performance depends on it. The BatchSize means that how many rows will be send to the database at one time to initiate the data transfer. I have set the BatchSize to 500 which means that once, the reader has read 500 rows they will be sent to the database to perform the bulk copy operation. By default the BatchSize is “1” which means that each row is sent to the database as a single batch.

Different BatchSize will give you different results. You should test that which batch size suits your needs.

Transferring Data Between Tables of Different Mappings:

In the above example both the tables had the same schema. Sometimes, you need to transfer the data between tables whose schema is different. Suppose you want to transfer all the product name and quantity from the Products_Archive table to the Products_TopSelling table. The schema in the two tables is different as they have different column names. This is also visible in the image above under the database design section.

private static void PerformBulkCopyDifferentSchema()

{

string connectionString = @"Server=localhost;Database=Northwind;Trusted_Connection=true";

DataTable sourceData = new DataTable();

// get the source data

using (SqlConnection sourceConnection = new SqlConnection(connectionString))

{

SqlCommand myCommand = new SqlCommand("SELECT TOP 5 * FROM Products_Archive", sourceConnection);

sourceConnection.Open();

SqlDataReader reader = myCommand.ExecuteReader();

// open the destination data

using (SqlConnection destinationConnection = new SqlConnection(connectionString))

{

// open the connection

destinationConnection.Open();

using (SqlBulkCopy bulkCopy = new SqlBulkCopy(destinationConnection.ConnectionString))

{

bulkCopy.ColumnMappings.Add("ProductID", "ProductID");

bulkCopy.ColumnMappings.Add("ProductName", "Name");

bulkCopy.ColumnMappings.Add("QuantityPerUnit", "Quantity");

bulkCopy.DestinationTableName = "Products_TopSelling";

bulkCopy.WriteToServer(reader);

}

}

reader.Close();

}

}

The ColumnMappings collection is used to map the column between the source table and the destination table.

Transferring Data from XML File to Database Table:

The data source is not only limited to database tables but you can also use XML files. Here is a very simple XML file which is used as a source for the bulk copy operation.

(Products.xml)

<?xml version="1.0" encoding="utf-8" ?>

<Products>

<Product productID="1" productName="Chai" />

<Product productID="2" productName="Football" />

<Product productID="3" productName="Soap" />

<Product productID="4" productName="Green Tea" />

</Products>

private static void PerformBulkCopyXMLDataSource()

{

string connectionString = @"Server=localhost;Database=Northwind;Trusted_Connection=true";

DataSet ds = new DataSet();

DataTable sourceData = new DataTable();

ds.ReadXml(@"C:\Products.xml");

sourceData = ds.Tables[0];

// open the destination data

using (SqlConnection destinationConnection = new SqlConnection(connectionString))

{

// open the connection

destinationConnection.Open();

using (SqlBulkCopy bulkCopy = new SqlBulkCopy(destinationConnection.ConnectionString))

{

// column mappings

bulkCopy.ColumnMappings.Add("productID", "ProductID");

bulkCopy.ColumnMappings.Add("productName", "Name");

bulkCopy.DestinationTableName = "Products_TopSelling";

bulkCopy.WriteToServer(sourceData);

}

}

}

The file is first read into the DataTable and then fed to the WriteToServer method of the SqlBulkCopy class. Since, the destination table is Products_TopSelling we had to perform the column mapping.

Conclusion:

In this article I demonstrated how to use the SqlBulkCopy class which is introduced in .NET 2.0. SqlBulkCopy class makes it easier to transfer the data from a source to the SQL SERVER database.

I hope you liked the article, happy coding!